TensorFlow is an open source software (OSS) library and platform for machine learning (ML) and deep learning (DL) that was developed by the Google Brain team. It is widely used in industry, academia, and research because of its performance, flexibility, and scalability. It allows developers to design and build ML models that run on both cloud-based platforms and edge devices. It offers a wide variety of features, such as model training and deployment, data visualization, and distributed computing.

In this chapter, we will use TensorFlow to create an ML model and transfer it to our ESP32 device, where it will run locally instead of on a centralized system such as the cloud. Note that we will not discuss ML in detail, as that is outside the scope of this book. We will, however, walk through the ML model that we create and how it fits within our deployment, so that you can understand the concept of edge computing through this implementation.

We will be writing our programs in Python in this chapter; feel free to refer to the Python and TensorFlow documentation as necessary.

You can access the GitHub folder for the code that is used in this chapter at https://github.com/PacktPublishing/IoT-Made-Easy-for-Beginners/tree/main/Chapter06/.

Edge computing fundamentals and its benefits for IoT

As mentioned earlier in the chapter, edge computing offers many benefits. However, the way it is implemented differs from the IoT network paradigms we’ve seen thus far.

In this section, we will examine the architecture and benefits of edge computing, how the components within an edge network operate, and where edge computing sits within the overall picture of IoT.

Edge computing architecture

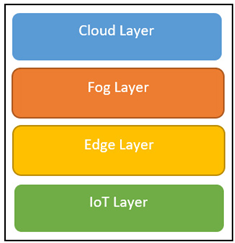

Edge computing architecture is generally composed of four different layers that play a role in the successful deployment of the network. There are two types of processing: near edge and far edge. Near edge refers to processing and data handling that occurs close to the IoT devices, often within the same local network or facility, optimizing for low latency and immediate response. Conversely, far edge involves processing done at more distant nodes, such as regional data centers, balancing the workload by handling fewer time-sensitive tasks and broader data analysis. The following diagram shows the layers in the form of models that make up how edge computing is done:

Figure 6.1 – Four layers of edge computing
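
To make the distinction between near edge and far edge more concrete, the following is a minimal Python sketch of how a task might be placed according to its latency budget. The tier names, the latency figures, and the `Task` and `place_task` names are all hypothetical and chosen only for illustration; real values depend entirely on the deployment.

```python
from dataclasses import dataclass

# Hypothetical round-trip latencies in milliseconds for each processing tier.
# These numbers are assumptions for illustration, not measurements.
TIER_LATENCY_MS = {"near_edge": 5, "far_edge": 40, "cloud": 150}


@dataclass
class Task:
    name: str
    max_latency_ms: float  # how long the device can wait for a result


def place_task(task: Task) -> str:
    """Return the most distant tier that still meets the task's latency budget."""
    # Check tiers from most distant (typically most capacity) to closest.
    for tier in ("cloud", "far_edge", "near_edge"):
        if TIER_LATENCY_MS[tier] <= task.max_latency_ms:
            return tier
    # Nothing fits the budget, so stay as close to the device as possible.
    return "near_edge"


if __name__ == "__main__":
    for task in (
        Task("motor_shutdown", max_latency_ms=10),
        Task("hourly_trend", max_latency_ms=100),
        Task("monthly_report", max_latency_ms=5000),
    ):
        print(task.name, "->", place_task(task))
```

In this sketch, a time-critical task stays at the near edge, while tasks that can tolerate more delay are pushed outward to the far edge or the cloud, which mirrors the workload balancing described above.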

We can see what each layer entails next:

Cloud computing: This layer is based on computing resources that are connected to end users through a Wireless Local Area Network (WLAN), over which it provides computing and storage. It is designed to act as an intermediary between the processed data that is sent through and the cloud. This design offers higher bandwidth, which in turn results in lower latency for the applications that we are running.

Fog computing: Fog computing is a decentralized computing resource that can be positioned anywhere between the end users and the cloud. It is based on Fog Computing Nodes (FCNs), which include routers, switches, and access points (APs), and it provides an environment in which devices operating at different protocol layers can communicate with the FCNs.

Edge computing: Edge computing is designed to operate within or near the radio access network (RAN), but with a distinct role focused on minimizing latency and optimizing data processing. The RAN manages radio resource scheduling at the physical and Media Access Control (MAC) layers, whereas edge computing functions at higher levels, closer to the application layer. Although it can be integrated with RAN infrastructure, for example near a radio network controller (RNC) or a base station, its primary purpose is to process data and execute applications near the IoT devices. This is achieved with the aid of an edge orchestrator, which organizes communications within the edge network and provides information about the network’s performance and the physical location of the computing infrastructure. This setup is crucial for IoT environments, where edge computing handles the data from various devices and sensors located at the network’s periphery. It enables them to exchange information efficiently through the communication network and, depending on their configuration, to autonomously control the infrastructure they are embedded in.
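
As a rough illustration of the orchestrator's role described above, the following Python sketch keeps a registry of edge nodes, reports their location and performance, and selects a node for a new workload. The `EdgeNode` and `EdgeOrchestrator` classes, their fields, and the selection rule are hypothetical and only meant to show the idea; a real edge orchestrator is considerably more involved.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class EdgeNode:
    name: str
    location: str      # physical location of the computing infrastructure
    latency_ms: float  # measured latency from the devices to this node
    load: float        # 0.0 (idle) to 1.0 (fully loaded)


class EdgeOrchestrator:
    """Hypothetical orchestrator that tracks nodes and places workloads."""

    def __init__(self) -> None:
        self._nodes: Dict[str, EdgeNode] = {}

    def register(self, node: EdgeNode) -> None:
        # Registering again under the same name updates the stored metrics.
        self._nodes[node.name] = node

    def report(self) -> List[dict]:
        """Return location and performance information, sorted by node name."""
        return [
            {"name": n.name, "location": n.location,
             "latency_ms": n.latency_ms, "load": n.load}
            for n in sorted(self._nodes.values(), key=lambda n: n.name)
        ]

    def pick_node(self, max_load: float = 0.8) -> Optional[EdgeNode]:
        """Pick the lowest-latency node whose load does not exceed max_load."""
        candidates = [n for n in self._nodes.values() if n.load <= max_load]
        if not candidates:
            return None
        return min(candidates, key=lambda n: n.latency_ms)


if __name__ == "__main__":
    orchestrator = EdgeOrchestrator()
    orchestrator.register(EdgeNode("gateway-a", "factory floor", 4.0, 0.95))
    orchestrator.register(EdgeNode("gateway-b", "factory floor", 6.0, 0.30))
    orchestrator.register(EdgeNode("regional-1", "regional data center", 35.0, 0.10))
    chosen = orchestrator.pick_node()
    print("Chosen node:", chosen.name if chosen else "none available")
```

Here the closest node is skipped because it is overloaded, so the workload goes to the next-closest node with spare capacity. This is one simple way to combine the performance and location information that an orchestrator exposes.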

Now that we have an idea of the architecture, we can look at the benefits of edge computing.